Designing software with AI

Part 2 of a practical example of using AI for coding

This is part 2 of a multi-part series:

In Part 1, we discussed setup and best practices and answered the question: “Will AI replace software developers?”.

In Part 2, we will design a solution and create a design document.

Recap: The Scenario

AI is pretty great at leet code examples. I am not. Also, I said this would be a practical example. Leet code is not indicative of real-life work.

(Anyone picking up that leet code and I are not on good terms?)

For practical examples, it helps to use something similar to real-life work.

Coding Challenges is an excellent resource for building real-world applications.

A URL Shortener Challenge looks like a good, practical example to work with.

Design

I’ve formulated a prompt that lays out the roles, goals, and process and establishes the AI as a collaborator. I’ve also included the Design Document template in the prompt to help guide the end result.

I used ChatGPT 3.5 for this portion. I also used ChatGPT 4 as a test run, and it yielded better results, but I want to give you an idea of what 3.5 is capable of.

I started off by prompting ChatGPT:

You and I are software engineers. We will design software together. Our goal is to design the best possible application to satisfy the business requirements.

Ask me clarifying questions as needed.

We will follow this process:

1. Discuss problem context

2. Discuss use cases

3. Discuss functional requirements

4. Discuss non-functional requirements (traffic, SLA, security, privacy, etc.)

5. Discuss data model

6. Discuss implementation details

7. Discuss observability (monitoring, logging)

8. Discuss any remaining design sections.

9. Finalize design.

At the end of each step in the process, we will update the design document.

Here is the design document template:

# {Design Name}

## Context

{Describe the problem}

## Functional Requirements

- {requirement 1}

## Non-functional requirements

- {requirement 1}

## Use Cases

{Business use cases and user experience}

- {use case 1}

## Exclusions

{Things we are explicitly not doing}

- {exclusion 1}

## Implementation Details

- {detail 1}

### {Topic}

- {detail 1}

### Data Model

{data model overview}

#### {model 1}

{model description}

### Input Validation

- {validation}

## Observability

### Metrics

- {metric 1}

### Logging

- {logging 1}

## Privacy and Security

- {security 1}

## Deployment

- {deployment 1}

## API

The API has the following endpoints:

1. `{path}` - A `{HTTP method}` endpoint {description}.

## Style Guide

Our organization follows these principles for development.

- DRY

- SOLID

- TDD

Our company follows these coding styles and best practices:

- {style 1}Great! Let's begin the process of designing the software application.

Step 1: Discuss problem context

Could you please provide some details about the problem that the application needs to solve? It would be helpful to understand the business domain, target users, and any specific pain points or goals that the application aims to address.

Okay, it picked up on the process and is guiding us.

I gave it the first bit of our URL shortener coding challenge:

This challenge is to build your own URL shortening service. Think bit.ly or tinyurl.com.

Basically it’s a service that lets a client submit a long URL which is then shortened to make it easier to use. For example:

https://www.amazon.com/Rust-Programming-Language-2nd/dp/1718503105/ref=sr_1_1?crid=3977W67XGQPJR&keywords=the+rust+programming+language&qid=1685542718&sprefix=the+%2Caps%2C3079&sr=8-1

could become: https://tinyurl.com/bdds8utd

This is typically done with a web based user interface, that let’s users enter a long URL and get back a shortened version of the URL. For example:

The shortening service then keeps a record of the short code, i.e. bdds8utd in the example above and the long URL that it maps to. Then when a client requests the shortened URL the service returns a HTTP redirect code, sending them to the long URL.Thank you for providing the problem context. I understand that we need to build a URL shortening service that takes a long URL as input and provides a shortened version of the URL as output. The service should also store a mapping between the shortened URL and the corresponding long URL. When a client accesses the shortened URL, they should be redirected to the original long URL.

Let's move on to the next step.

Step 2: Discuss use cases

Can you provide some specific use cases or scenarios that users might encounter when using the URL shortening service?

I could think up use cases here, but let’s see what ChatGPT thinks:

What use cases do you foresee?

Shorten URL: Users can input a long URL into the service and request a shortened URL.

Retrieve Short URL: Users can retrieve the shortened URL associated with a specific long URL.

Redirect to Long URL: When users access a shortened URL, they are redirected to the original long URL.

Analytics and Statistics: The service can provide analytics and statistics on the usage of the shortened URLs, such as the number of clicks or the geographical location of the users.

Analytics is a realistic business need, but it’s too much work for our example.

We will not worry about analytics in this version. Let's continue with those use cases.Understood.

Let's proceed to the next step.

Step 3: Discuss functional requirements

From the use cases we discussed, here are the functional requirements:

Shorten URL

The service should accept a long URL from the user.

It should generate a unique shortened URL for the given long URL.

The mapping between the shortened URL and the long URL should be stored in the system.

The shortened URL should be returned to the user.

Retrieve Short URL

The service should allow users to retrieve the original long URL associated with a specific shortened URL.

Redirect to Long URL

When a user accesses a shortened URL, the service should redirect them to the corresponding long URL.

Cool. This time ChatGPT anticipated functional requirements.

Let’s throw in some more:

- The code should be short, less than 10 chars

- The API should be a JSON API

- When visiting a short URL that is not found, the response should be an HTML error page with an appropriate HTTP status code and an error message instead of JSONGreat! I've added the additional functional requirements you mentioned

Step 4: Discuss non-functional requirements

In addition to the functional requirements, we should also consider the non-functional requirements that are important for the successful operation and performance of the URL shortening service.

💡 Don’t worry. I’m not going to paste all the output. I wanted to give you a sense that it does (more or less) know what it’s talking about. I’ll summarize the conversation more from here on out.

ChatGPT wants to jump to listing non-functional requirements. I would like to brainstorm here. Our traffic needs will dictate some non-functional requirements, so we’ll start there.

Let's estimate how much traffic we will have and how many URLs we need to store. How much traffic should we expect?

Daily Traffic: Assume a moderate number of users initially, such as 1000 requests per day.

Request Rate: Assuming a uniform distribution of requests throughout the day, we can estimate around 70 requests per hour (1000 requests / 24 hours).

Peak Traffic: Consider the possibility of occasional spikes in traffic, especially during peak usage times. We can assume a peak traffic rate of 2-3 times the average request rate, resulting in around 140-210 requests per hour during peak periods.

🥱 This is a very modest traffic load. It’s probably realistic for a service with no marketing budget, but it would be hard to code something that doesn’t meet these needs. Let’s spice it up a little bit.

I asked my coding partner some leading questions to figure out what we could handle on a modest budget instead.

Let's work backwards from storage to see what capacity we could support. We will store the data in-memory in Redis. We only want to use 2GB of RAM. How many URLs can we store?

Assume each URL entry requires 100 bytes of memory. This includes the long URL, the shortened URL, and any additional metadata.

Considering the 2GB RAM constraint, we have approximately 2,000,000,000 bytes available.

Hm 🤔. I’m no mathematician magician, but that looks off. ChatGPT 3.5 seems to have issues doing math calculations. I will skip over the glaring calculation detail for now and address the more significant issue first.

100 bytes seems short for a URL shortener. Likely users will want to shorten much longer URLs. What should we plan for for long URLs?So, with an estimated average URL entry size of 500 bytes, we can store approximately 4 million URLs in Redis while utilizing 2GB of RAM.

Okay. The math checks out this time. But 500 seems arbitrary. I’ll guide ChatGPT to a more informed choice with some leading questions.

What is the maximum URL length?The maximum length of a URL is not strictly defined by any standard or specification.

For practical purposes, it's recommended to consider keeping URLs reasonably concise and within a reasonable length limit, typically around 2,000 to 4,000 characters.

Do web browsers impose a limit?Yes, web browsers do impose a limit on the length of URLs they can handle. This limit is set by the individual browsers and can vary across different browser versions.

The maximum URL length that browsers can handle typically ranges from 2,000 to 8,000 characters.

👀 My independent research of Googling things and clicking on random StackOverflow links says various degrees of “some browsers support a ridiculously long URL, but you’ll have the most compatibility with around 2,000 characters.”

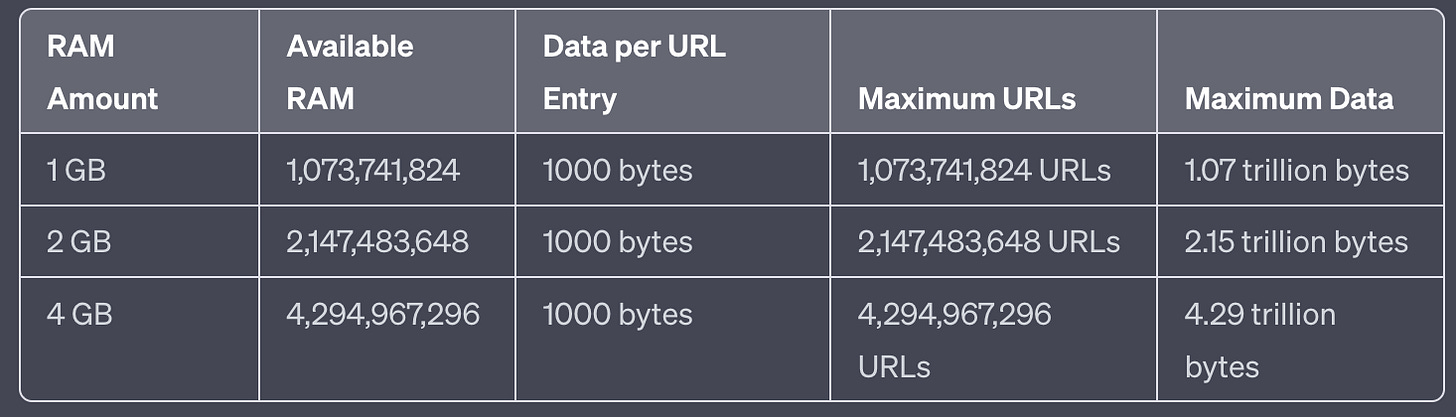

So I make a judgment call of using 1000 bytes on average for our calculations.

ChatGPT 3.5 can often be wrong on calculations. It helps to ask for more detail in tables to see if it’s thinking correctly.

Let's plan for an average of 1000 bytes per URL. Give me a table of traffic and data estimates for this size of URL as well as the total RAM we'll need. We will want to allow room for spikes in traffic. Allow for a 10x spike.

Include the columns: scale, urls per hour, urls per day, urls per month, data per day, data per month

Show rows for different RAM amounts: 1 GB, 2 GB, 4 GB.Sure! Let's estimate the traffic and data requirements based on an average URL size of 1000 bytes, and also factor in spikes in traffic.

I didn’t bother checking if those numbers were correct. It’s not really the data I’m looking for. I want ChatGPT to figure out how many URLs the RAM can hold at a maximum.

Those numbers aren't reaching anywhere near the RAM limits. Let's figure out the maximum data we can handle for each RAM amount.Apologies for the confusion. Let's calculate the maximum data we can handle for each RAM amount, considering the URL size of 1000 bytes.

Okay. Back to bad math.

That does not add up. `1,073,741,824 bytes / 1000 bytes` is not 1,073,741,824Apologies for the error in the calculation. You're correct; the division was incorrect in the previous response.

I apologize for the confusion caused by the previous incorrect calculations.

The data is still not quite right. What I do notice is that ChatGPT 3.5 apologizes… a lot. It gets pretty annoying. It seems ChatGPT 4 apologizes less often. Maybe because it’s correct more often and does not need to.

I decide to take the coaching approach and slightly change to a leading direction:

How do we calculate how many URLs will fit in a given amount of RAM?For example, if the average URL size is 1000 bytes and you have 1 GB (1,073,741,824 bytes) of available RAM, the calculation would be:

Maximum URLs = 1,073,741,824 bytes / 1000 bytes = 1,073,741 URLs

Okay, so it knows what to do. Let’s see if it is set on the right path.

okay. Given that formula, update the table.Apologies for the confusion earlier.

🤦🏼 *facepalm*

I apologize for the confusion and any frustration caused. I understand the importance of accurate information in the design process.

At least it knows I’m frustrated.

For those of you bad at math like me, 2.15 trillion bytes is 2,105 GB — not 2 GB. I guess there’s that, I’m better at math than ChatGPT 3.5.

I simplify the problem for it.

We have 3 scales: Small (1GB RAM), Medium (2GB RAM), Large (4GB RAM)

Create a table with these columns: scale, urls, total data

Work backward from the total data, which must be no higher than the RAM amount. We want to figure out for a given RAM amount, how many URLs can we fit.Looks like it figured it out. We walked through some more calculations then I came to this conclusion:

If we want to keep data only in memory, we'll either need a lot more RAM or to only store data for a short amount of time.So I figure:

Let's be the SnapChat of URLs shorteners and only store the URLs for 24 hours. That should be long enough for quick sharing of URLs and allow us to use an in memory store like Redis. Right?Storing URLs for a short period of time aligns with the goal of a temporary and ephemeral URL shortening service, similar to the concept of SnapChat.

Not an enthusiastic endorsement, but I’ll take it.

Okay, let's plan for a max of ~200,000 URLs of capacity. Even though we

only store URLs for 24 hours, we want to make sure we do not repeat URLs for 10 years. How many possible URLs is that and what's the shortest short code we can use to represent that number?Let’s skip ahead.

We won't be storing the short codes, so we won't really know about duplicates for 10 years. Instead, we need to make it statistically unlikely that we'll hit a duplicate in that time frame. We do want to verify there are no duplicates for the 24-hour window we're storing data. We could use a random ID for the short code, base62 encoded. How many random bits do we need to avoid collisions for 10 years?⚠️ Spoiler alert. This does not go well. ⚠️

After much back in forth, I came to this conclusion:

Your math is off so I asked ChatGPT 4. Here's what we came up with:A 10 character short code would make collision very unlikely. I'd prefer smaller, so let's go with 8 chars. It's unlikely enough for normal traffic. We don't expect a 10x spike every day for 10 years.Thank you for providing the updated information and the calculations. I apologize for any confusion caused by the previous responses.

Yes, I cheated and asked ChatGPT 3.5’s more intelligent cousin, ChatGPT 4. But it did not take it hard; it ignored this and accepted the answer with an apology.

Now that the calculations are out of the way, we can do work that ChatGPT 3.5 is better suited for.

This discussion implied some new functional and non-functional requirements. What are they?Functional Requirements:

Generate Unique Short Codes: The system should generate unique short codes for each long URL to avoid collisions and ensure uniqueness within the desired time frame.

Store URLs for a Limited Duration: URLs should be stored in memory for a maximum duration of 24 hours, aligning with the temporary nature of the service.

Remove Expired URLs: The system should automatically remove expired URLs from storage after the designated 24-hour period.

Handle 10x Traffic Spike: The system should be able to handle a spike in traffic up to 10 times the normal volume to accommodate occasional high-demand periods.

Redirect to Long URLs: When a short URL is accessed, the system should redirect users to the corresponding long URL.

Non-Functional Requirements:

Collision Probability: The system should have a low collision probability for generated short codes, ensuring that the likelihood of duplicate codes is statistically unlikely.

Compact Short Codes: The short codes should be reasonably short in length, striking a balance between compactness and collision avoidance.

We carry on like this back and forth on several topics:

What is the next step in our process?

Okay, let's move on to the data model. What do we need to store?

For the use case "User submits the same long URL multiple times and receives the same short URL within the 24-hour duration.", we will need to store additional data.

This will add some additional storage for each URL. Considering the two mappings in our data model, how much will each URL take in space?

What do you think the API should be?

etc

I have to remind it:

Don't be so apologetic. We're working on this together.Thank you for your understanding and kind words! I appreciate your collaborative approach, and I'm here to assist you throughout the process. If you have any more questions, need further clarification, or require assistance with any aspect of the design, please don't hesitate to let me know. Let's continue working together to create a successful URL shortening service design.

You can see this is a conversation. ChatGPT and I are designing together. ChatGPT is informing me, and I’m making the decisions. Using the DACI model, I would be the Driver and the Approver, and ChatGPT would be a Contributor.

We went back and forth, converging on a design. If you’re interested, view the whole interaction.

And here’s a snippet of the design we came up with. We’ll use it in Part 3 and beyond.

# URL Shortening Service

## Context

This service provides APIs that take long URLs and provide a short URL suitable for easy sharing.

## Functional Requirements

- The JSON API should allow users to submit a long URL and receive a shortened URL in return.

- When visiting the shortened URL, the visitor will be redirected to the long URL.

- When visiting a short URL that is not found or has expired, the response should be an HTML error page with an appropriate HTTP status code and an error message. The error page should provide a user-friendly display.

- Short URLs are only valid for 24 hours. After the 24-hour validity window, a new short URL should be generated for the same long URL.

[truncated]

## Non-functional requirements

- Be able to handle generating and storing ~200,000 short URLs a day and up to 2,000,000 short URLs in a day during a 10x spike in URL generation.

- Use minimal memory, up to 2GB.

[truncated]

## Use Cases

- User submits a long URL and receives a short URL in response.

- User visits the short URL and gets redirected to the original long URL.

- User attempts to access an expired short URL and receives an appropriate response.

- User submits an invalid long URL and gets an error response.

[truncated]You can view the complete design document.

We can now use this design document to generate some code.

Next

Head to Part 3. Continue the journey.

Part 2: Design ← YOU ARE HERE

Part 4: Generating tests

Part 5: Generating the application and making it work